Most search teams still evaluate SEO and paid search separately.

As a result, decisions are often based on:

This creates false certainty.

In reality, organic and paid results compete with and complement each other on the same SERP, for the same user intent.

SEO–SEA experiments exist to measure that interaction objectively, over time, at the query level.

To avoid confusion, an SEO–SEA experiment is not:

SEO–SEA experiments do not attempt to prove that paid search replaces organic traffic, or vice versa.

They exist to measure incrementality and efficiency, not channel dominance.

SEO–SEA experiments address the problem of siloed evaluation by testing both channels together, under controlled conditions.

Not all keywords are eligible for SEO–SEA experiments.

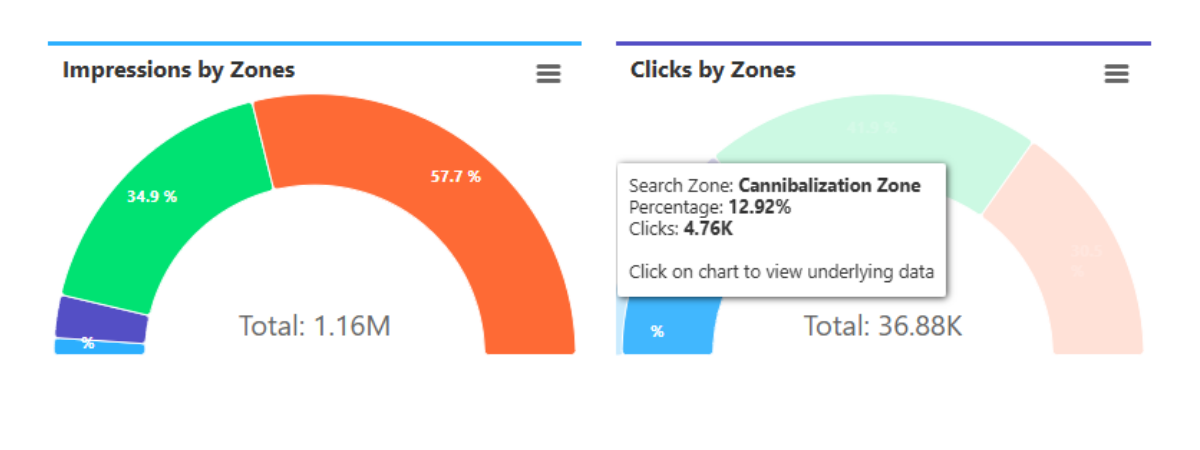

The Cannibalization Zone is the subset of queries where paid and organic visibility overlap enough to justify controlled testing.

According to the automated methodology, the Cannibalization Zone typically includes keywords that meet all of the following conditions:

These are the queries where paid spend is most likely substituting for organic demand rather than expanding it.

Outside this zone, cannibalization tests are statistically unreliable or strategically irrelevant.
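The following is a minimal Python sketch of a zone-membership check, assuming three illustrative conditions: a strong organic position plus meaningful paid and organic click volume. The `KeywordStats` fields, the `in_cannibalization_zone` helper, and every threshold are hypothetical stand-ins, not the methodology's actual criteria.

```python
from dataclasses import dataclass


@dataclass
class KeywordStats:
    """Monthly paid and organic metrics for one query (hypothetical schema)."""
    query: str
    paid_clicks: int
    organic_clicks: int
    organic_position: float  # average organic position for the query


# Hypothetical thresholds, standing in for the methodology's real conditions.
MAX_ORGANIC_POSITION = 3.0  # organic result already visible near the top
MIN_PAID_CLICKS = 50        # enough paid volume to measure a change
MIN_ORGANIC_CLICKS = 50     # enough organic volume to measure a change


def in_cannibalization_zone(k: KeywordStats) -> bool:
    """Return True only if the keyword meets every overlap condition."""
    conditions = (
        k.organic_position <= MAX_ORGANIC_POSITION,
        k.paid_clicks >= MIN_PAID_CLICKS,
        k.organic_clicks >= MIN_ORGANIC_CLICKS,
    )
    return all(conditions)


rows = [
    KeywordStats("brand shoes", paid_clicks=400, organic_clicks=900, organic_position=1.2),
    KeywordStats("running shoes", paid_clicks=1200, organic_clicks=30, organic_position=14.5),
]
zone = [k.query for k in rows if in_cannibalization_zone(k)]
print(zone)  # ['brand shoes']
```

"running shoes" is excluded even with high paid volume: its organic result is too weak for paid spend to be substituting for it.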

SEO–SEA experiments are always run at the keyword level, not at the campaign or page level.

Each month, a limited set of cannibalization candidates is generated automatically based on strict eligibility criteria:

Only keywords that satisfy all conditions simultaneously enter the test pool.

This prevents biased experiments and protects overall performance.
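The monthly selection step can be sketched the same way: every rule must pass, and the surviving keywords are capped so that only a limited set enters testing. In this Python sketch the rules, the `MAX_MONTHLY_TESTS` cap, and ranking by paid cost are all hypothetical choices, assumed for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Candidate:
    query: str
    paid_cost: float          # monthly paid spend on the query
    paid_clicks: int
    organic_position: float
    already_testing: bool     # keyword is part of a running experiment


Rule = Callable[[Candidate], bool]

# Hypothetical eligibility rules; the real criteria may differ.
RULES: List[Rule] = [
    lambda c: c.organic_position <= 3.0,
    lambda c: c.paid_clicks >= 50,
    lambda c: not c.already_testing,  # never run two tests on one keyword at once
]

MAX_MONTHLY_TESTS = 2  # hypothetical cap on the monthly test pool


def monthly_test_pool(candidates: List[Candidate],
                      rules: List[Rule] = RULES,
                      limit: int = MAX_MONTHLY_TESTS) -> List[Candidate]:
    """Keep keywords that satisfy all rules, highest paid cost first, up to `limit`."""
    eligible = [c for c in candidates if all(rule(c) for rule in rules)]
    eligible.sort(key=lambda c: c.paid_cost, reverse=True)
    return eligible[:limit]


pool = monthly_test_pool([
    Candidate("brand shoes", 900.0, 400, 1.2, False),
    Candidate("brand boots", 300.0, 120, 2.0, False),
    Candidate("brand sale", 1500.0, 600, 1.0, True),   # excluded: already testing
    Candidate("brand socks", 50.0, 60, 2.5, False),
])
print([c.query for c in pool])  # ['brand shoes', 'brand boots']
```

Ranking by paid cost is one reasonable way to fill a limited pool: the most expensive overlaps are where a confirmed substitution effect would free up the most budget.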

The most common SEO–SEA experiment types include:

Paid search is used to identify high-cost queries. Organic performance is tested to determine whether paid spend adds net incremental value.

Winning paid ad messages are tested as organic titles and descriptions to validate CTR impact.

Paid traffic is used to test new pages or content formats before long-term SEO investment.

Paid visibility is systematically reduced to observe how organic performance responds over time.
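For the last type, one way to make the reduction systematic rather than abrupt is a fixed step schedule. The Python sketch below assumes a hypothetical `reduction_schedule` helper that lowers a keyword's daily paid budget in equal steps to zero; the number of steps and how long each one is held are assumptions.

```python
def reduction_schedule(start_budget: float, steps: int) -> list:
    """Daily budget per step, lowered linearly from start_budget to zero.

    Each value would be held for a fixed period (for example, a week) so that
    organic performance can be observed at every level of paid visibility.
    """
    if steps < 1:
        raise ValueError("steps must be at least 1")
    return [round(start_budget * (1 - i / steps), 2) for i in range(steps + 1)]


print(reduction_schedule(100.0, 4))  # [100.0, 75.0, 50.0, 25.0, 0.0]
```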

Cannibalization cannot be measured with short pauses or static comparisons.

A valid SEO–SEA cannibalization experiment follows a standardized, repeatable lifecycle.

This structure ensures results reflect behavioral reality, not temporary effects.
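A lifecycle like this can be modeled as a small state machine. The Python sketch below assumes a plausible sequence (a baseline period, a test period with paid visibility reduced, an evaluation, then either rollout or reactivation); the `Phase` names and the 28-day minimums are hypothetical. The minimums encode the point that short pauses are not enough.

```python
from enum import Enum
from typing import Optional


class Phase(Enum):
    BASELINE = "baseline"        # paid and organic both active, metrics recorded
    TEST = "test"                # paid visibility reduced or paused
    EVALUATION = "evaluation"    # apply explicit success and failure rules
    ROLLED_OUT = "rolled_out"    # success: paid spend stays reduced
    REACTIVATED = "reactivated"  # failure: paid search restored


# Hypothetical minimum durations (days) for the timed phases.
MIN_DAYS = {Phase.BASELINE: 28, Phase.TEST: 28}


def next_phase(phase: Phase, days_in_phase: int,
               passed: Optional[bool] = None) -> Phase:
    """Advance the experiment only when the current phase is complete."""
    if phase in MIN_DAYS:
        if days_in_phase < MIN_DAYS[phase]:
            return phase  # too early: stay put rather than read a temporary effect
        return Phase.TEST if phase is Phase.BASELINE else Phase.EVALUATION
    if phase is Phase.EVALUATION:
        if passed is None:
            raise ValueError("evaluation needs a pass/fail result")
        return Phase.ROLLED_OUT if passed else Phase.REACTIVATED
    return phase  # ROLLED_OUT and REACTIVATED are terminal


print(next_phase(Phase.TEST, 10))                     # Phase.TEST
print(next_phase(Phase.TEST, 28))                     # Phase.EVALUATION
print(next_phase(Phase.EVALUATION, 0, passed=False))  # Phase.REACTIVATED
```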

A successful SEO–SEA cannibalization experiment shows:

When these conditions are met, paid spend can be reduced or reallocated without harming growth.

Incrementality is proven when efficiency improves while total demand capture is preserved.
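Stated as formulas, and assuming baseline and test windows of equal length, this criterion can be written as follows. The notation is introduced here for illustration: $P$ and $O$ are paid and organic clicks, $S$ is paid spend, subscripts $b$ and $t$ mark the baseline and test windows, and the tolerance $\varepsilon$ is a hypothetical choice.

$$
C_b = P_b + O_b, \qquad C_t = P_t + O_t
$$

$$
R = \frac{C_t}{C_b}, \qquad E_b = \frac{S_b}{C_b}, \qquad E_t = \frac{S_t}{C_t}
$$

$$
\text{success} \iff R \ge 1 - \varepsilon \;\text{ and }\; E_t < E_b
$$

Here $R$ measures how much total demand capture is preserved, and $E$ is paid spend per captured click, so a lower $E_t$ means improved efficiency. The difference $C_b - C_t$ is an estimate of the clicks the paid channel was actually adding, assuming nothing else shifted demand between the two windows.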

SEO–SEA experiments require explicit success and failure rules.

Failed keywords are automatically reactivated in paid search to protect performance.
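Those rules can be made explicit in code. This Python sketch assumes a retention floor of 95% of baseline clicks and requires paid spend per captured click (paid plus organic) to fall; the `Period` type, the `decide` helper, and the threshold are hypothetical. A keyword that fails, or that has no baseline to compare against, is flagged for reactivation.

```python
from dataclasses import dataclass


@dataclass
class Period:
    paid_clicks: int
    organic_clicks: int
    paid_cost: float

    @property
    def total_clicks(self) -> int:
        return self.paid_clicks + self.organic_clicks

    @property
    def cost_per_captured_click(self) -> float:
        # Paid spend divided by all clicks captured on the SERP (paid + organic).
        if self.total_clicks == 0:
            return float("inf")
        return self.paid_cost / self.total_clicks


RETENTION_FLOOR = 0.95  # hypothetical: keep at least 95% of baseline clicks


def decide(baseline: Period, test: Period, floor: float = RETENTION_FLOOR) -> str:
    """Return 'keep_reduced' on success and 'reactivate' on failure."""
    if baseline.total_clicks == 0:
        return "reactivate"  # nothing to compare against: fail safe
    retention = test.total_clicks / baseline.total_clicks
    more_efficient = test.cost_per_captured_click < baseline.cost_per_captured_click
    return "keep_reduced" if retention >= floor and more_efficient else "reactivate"


baseline = Period(paid_clicks=400, organic_clicks=900, paid_cost=120.0)
print(decide(baseline, Period(0, 1260, 0.0)))  # keep_reduced (97% retained)
print(decide(baseline, Period(0, 1000, 0.0)))  # reactivate (77% retained)
```

Defaulting to reactivation whenever the rules cannot be satisfied keeps the experiment on the side of protecting performance.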

SEO–SEA experiments only work when results are visible and comparable.

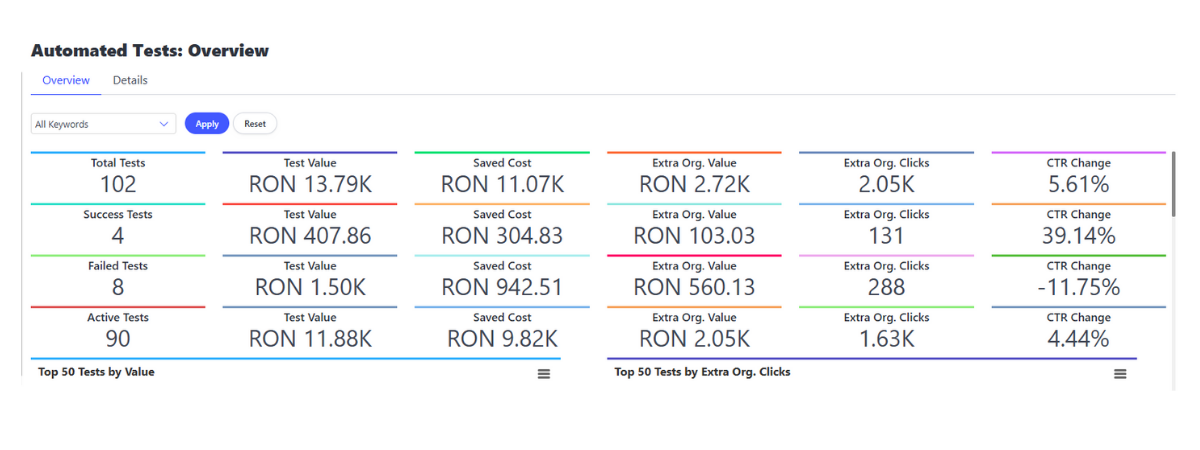

Dedicated dashboards provide:

These dashboards allow teams to evaluate experiments daily, not retrospectively.

Proper SEO–SEA experiments:

They are designed to remove assumptions, not reinforce them.

SEO–SEA experiments only create value when they are:

In practice, this requires combining Google Ads and Google Search Console data and evaluating results over time.
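A minimal Python sketch of that combination step, assuming both sources have already been exported as rows: it merges a Google Ads search-term export and a Search Console query export on normalized query text. The field names (`search_term`, `query`, `clicks`, `cost`, `position`) and the `join_by_query` helper are hypothetical, so real exports would need to be mapped onto them first.

```python
from typing import Dict, List


def normalize(query: str) -> str:
    """Lowercase and collapse whitespace so both sources use the same key."""
    return " ".join(query.lower().split())


def join_by_query(ads_rows: List[dict], gsc_rows: List[dict]) -> Dict[str, dict]:
    """Merge paid and organic rows into one record per normalized query."""
    merged: Dict[str, dict] = {}

    def record(key: str) -> dict:
        return merged.setdefault(key, {
            "paid_clicks": 0, "paid_cost": 0.0,
            "organic_clicks": 0, "organic_position": None,
        })

    for row in ads_rows:
        rec = record(normalize(row["search_term"]))
        rec["paid_clicks"] += row["clicks"]
        rec["paid_cost"] += row["cost"]

    for row in gsc_rows:
        rec = record(normalize(row["query"]))
        rec["organic_clicks"] += row["clicks"]
        # Several organic rows (e.g. different pages) may match one query;
        # keep the best (lowest) position seen.
        pos = rec["organic_position"]
        rec["organic_position"] = row["position"] if pos is None else min(pos, row["position"])

    return merged


combined = join_by_query(
    [{"search_term": "Brand Shoes", "clicks": 400, "cost": 120.0}],
    [{"query": "brand  shoes", "clicks": 850, "position": 1.4},
     {"query": "brand shoes", "clicks": 50, "position": 2.1}],
)
print(combined["brand shoes"])
# {'paid_clicks': 400, 'paid_cost': 120.0, 'organic_clicks': 900, 'organic_position': 1.4}
```

Queries that appear in only one source still get a record, with zeros on the missing side, which makes paid-only and organic-only queries easy to exclude from testing.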

ClimbinSearch operationalizes this approach by running automated SEO–SEA cannibalization experiments on top of search analytics, enabling performance and SEO teams to make defensible budget and strategy decisions based on observed incrementality, not intuition.

As search results become more crowded and budgets more constrained, intuition is no longer sufficient.

SEO–SEA experiments provide a way to:

SEO–SEA experiments are not a tactic.

They are the only reliable method for measuring incrementality in search.

Traditional SEO–SEA alignment assumes frequent meetings, shared documents, and manual handoffs between teams.

In practice, this rarely works.

SEO and SEA teams operate on different cadences, tools, and success metrics. Coordination becomes dependent on availability, interpretation, and subjective judgment. As a result, decisions are delayed, diluted, or never implemented consistently.

SEO–SEA experiments remove the need for manual coordination.

In an experimentation-based model, SEO and SEA teams do not need to talk to each other continuously.

They need a shared system.

When both channels feed data into the same experimentation framework:

The system becomes the single source of truth.

SEO and SEA no longer exchange opinions.

They exchange observed outcomes.

In a unified SEO–SEA experimentation system:

This does not remove collaboration.

It removes friction.

ClimbinSearch acts as the unifying layer between SEO and SEA by:

SEO and SEA teams interact with the same dashboards, the same experiments, and the same conclusions, even if they never meet.

Alignment is no longer a process.

It is a property of the system.

As organizations grow, manual coordination does not scale.

Systems do.

SEO–SEA experiments embedded in a shared platform allow teams to:

This is how SEO and SEA stop being parallel functions and become inputs into the same decision engine.