SEO experiments are not a nice-to-have. They are how you prove impact.

Most SEO teams do not struggle because they lack ideas. They struggle because they cannot prove what worked, why it worked, and whether the result will repeat.

Search is noisy. Demand changes. SERP layouts shift. Competitors publish. Paid budgets move. If you ship changes without a test design, you are often looking at correlation and calling it causation.

Agencies do not win because they ran one good experiment. Agencies win because they built an experimentation workflow that produces measurable progress month after month, even when priorities shift and teams change.

This is exactly what we give our partner agencies in ClimbinSearch. Not dashboards. An operating system for SEO experiments.

In this guide, you will learn what SEO experiments are, why they fail in real life, and how agencies can run SEO experiments as a repeatable system that compounds category visibility over time.

An SEO experiment is a structured way to answer a single question, such as:

🍊Will rewriting titles to match intent increase clicks for a specific query cluster?

🍏Will adding internal links from high-authority pages improve rankings for a category?

🍎Will consolidating two competing pages increase total visibility or kill long-tail demand?

To qualify as an experiment, you need five ingredients:

1. A clear hypothesis

2. A test group and a control group

3. A change you can describe precisely

4. A time window that can capture a signal

5. A measurement plan tied to the target queries

If you cannot define these, you are not running an experiment. You are shipping an edit and hoping it sticks.
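The five ingredients above can be written down as a small record that refuses to call itself an experiment until every field is filled in. This is a minimal sketch, not a ClimbinSearch feature: the `ExperimentSpec` class, its field names, and the example URLs, dates, and queries are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ExperimentSpec:
    """One SEO experiment, described by the five ingredients (hypothetical structure)."""
    hypothesis: str            # 1. what you expect to happen, and why
    test_pages: frozenset      # 2. URLs that receive the change
    control_pages: frozenset   # 2. comparable URLs left untouched
    change: str                # 3. precise description of the change
    start: date                # 4. first day of the measurement window
    end: date                  # 4. last day of the measurement window
    target_queries: frozenset  # 5. query cluster used to measure impact

    def problems(self) -> list:
        """Return the reasons this is not yet a valid experiment."""
        issues = []
        if not self.hypothesis.strip():
            issues.append("missing hypothesis")
        if not self.test_pages or not self.control_pages:
            issues.append("need both a test group and a control group")
        if self.test_pages & self.control_pages:
            issues.append("a page cannot be in both groups")
        if not self.change.strip():
            issues.append("change is not described")
        if self.end <= self.start:
            issues.append("time window is empty")
        if not self.target_queries:
            issues.append("no target queries to measure")
        return issues


# Illustrative values only; none of these come from a real account.
spec = ExperimentSpec(
    hypothesis="Intent-matched titles lift CTR on the trail shoes cluster",
    test_pages=frozenset({"/shoes/trail", "/shoes/road"}),
    control_pages=frozenset({"/shoes/track", "/shoes/walking"}),
    change="Rewrite the title tag to lead with the primary query",
    start=date(2024, 3, 1),
    end=date(2024, 4, 12),
    target_queries=frozenset({"trail running shoes", "road running shoes"}),
)
print(spec.problems())  # an empty list means all five ingredients are defined
```

Storing the spec next to the results also answers the question that usually gets lost two months later: what changed, when, and what success was supposed to look like.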

SEO experiments work when they are designed around query clusters, not around isolated pages or single keywords.

SEO experimentation breaks for predictable reasons.

Teams measure pages instead of query clusters. Most pages rank for mixed intent. If you do not measure the segment you targeted, you will not know what moved.

Control groups are not comparable. If your control pages are stronger or weaker at baseline, you are watching variation, not impact.
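One way to check comparability before launch is to compare the two groups on a baseline metric, such as weekly clicks per page in the weeks before the change. The sketch below uses an absolute standardized mean difference. The function name `baseline_gap` and the sample numbers are assumptions for illustration, and the threshold you accept is a judgment call rather than a rule from this guide.

```python
from statistics import mean, pstdev


def baseline_gap(test_baseline, control_baseline):
    """Absolute standardized mean difference between two groups' baseline metric.

    Values near 0 mean the groups started from a similar level.
    Large values mean the control group is not a fair comparison.
    """
    pooled = ((pstdev(test_baseline) ** 2 + pstdev(control_baseline) ** 2) / 2) ** 0.5
    gap = abs(mean(test_baseline) - mean(control_baseline))
    if pooled == 0:
        # No variation inside either group: identical means match, anything else does not.
        return 0.0 if gap == 0 else float("inf")
    return gap / pooled


# Hypothetical baseline clicks per page for each group.
test_pages = [100, 120, 110, 95]
control_pages = [300, 340, 310, 295]
print(baseline_gap(test_pages, control_pages))  # a large value: pick a different control
```

If the gap is large, swap pages between groups or choose a different control set before shipping the change, not after.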

The work is not operationalized. Experiments live in decks and chats. Two months later, nobody remembers what changed, when it changed, and what success was supposed to look like.

For agencies, this becomes painful fast.

When the client asks what changed this month, the answer becomes a story, not a system.

The real purpose of SEO experiments is not to create a one-time uplift. It is to build a repeatable loop that produces measurable improvements month after month.

When an agency manages SEO, the hardest part is not generating ideas.

The hardest part is staying measurable and consistent through all the real world noise.

😶‍🌫️Multiple stakeholders

🎩Multiple priorities

👽Development constraints

⛷️Promotions and seasonality

🦁Paid media shifts

🐾And the constant pressure to prove growth in a channel that compounds slowly

So we designed ClimbinSearch to support a specific agency reality.

Partner agencies need a way to define what matters, track it with discipline, and show progress in a way that aligns teams across SEO, SEA, and analytics.

Categorization rules and branded definitions aligned with brand strategy

Before agencies run SEO experiments, they need a segmentation model that reflects how people actually search and how the brand is positioned. Without this, dashboards become blended averages and it is impossible to tell what actually moved.

Partner agencies start by setting up Categorization Rules and a clear branded definition in ClimbinSearch, aligned with the brand strategy and category priorities. This turns raw queries into stable intent clusters, separates branded and non-branded demand, and keeps reporting consistent month after month.
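To make the idea concrete, here is a minimal sketch of rule-based query categorization. It is not the ClimbinSearch rule engine: the brand term `acme`, the cluster names, and the patterns are hypothetical. The point it illustrates is that ordered, explicit rules assign the same query to the same segment every month.

```python
import re

# Hypothetical branded definition: any query mentioning the brand name.
BRAND_PATTERN = re.compile(r"\bacme\b", re.IGNORECASE)

# Hypothetical intent rules, checked in order. The first match wins,
# so a query always lands in exactly one cluster.
CLUSTER_RULES = [
    ("comparison", re.compile(r"\b(vs|versus|best|compare)\b", re.IGNORECASE)),
    ("transactional", re.compile(r"\b(buy|price|cheap|discount)\b", re.IGNORECASE)),
    ("informational", re.compile(r"\b(how|what|why|guide)\b", re.IGNORECASE)),
]


def categorize(query):
    """Return (brand_segment, intent_cluster) for a raw search query."""
    brand = "branded" if BRAND_PATTERN.search(query) else "non_branded"
    for name, pattern in CLUSTER_RULES:
        if pattern.search(query):
            return brand, name
    return brand, "uncategorized"


for q in ["acme trail shoes price", "how to clean running shoes", "trail shoes"]:
    print(q, "->", categorize(q))
```

Queries that fall into `uncategorized` are a useful signal in their own right: they show where the rules do not yet reflect how people search.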

Once this governance layer is in place, experiments become measurable. Agencies can evaluate shifts by query segment, connect them to the pages that should own that intent, and understand impact at query level, page level, and category level.

This is the foundation of scalable SEO experiments: stable segmentation first, experiments second.

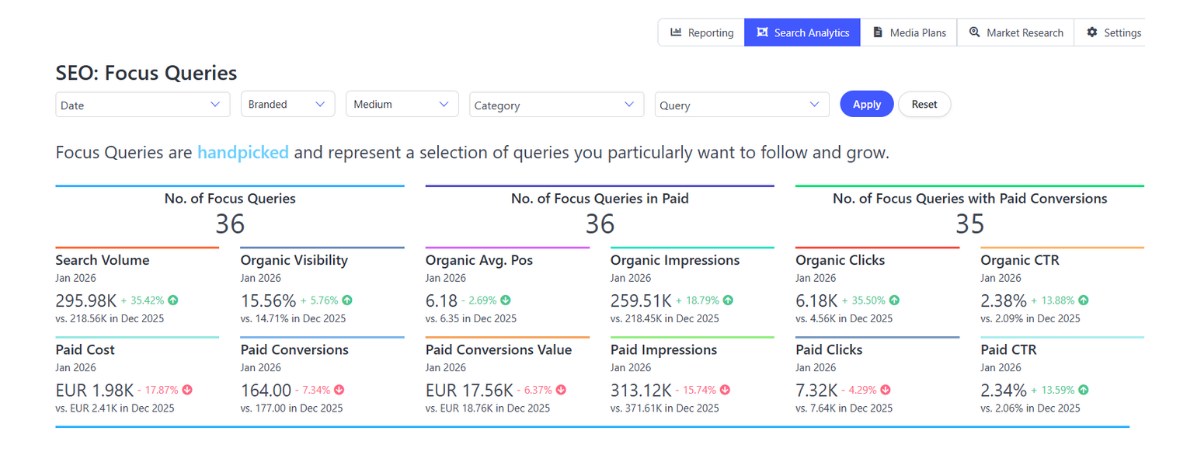

Partner agencies can set up their focus in ClimbinSearch settings so they always follow dashboards that are zoomed into what matters. This creates an always-on measurement surface that survives shifting priorities, seasons, and team changes.

Focus Queries and Focus Pages are the anchor. They are the hand-picked set a team can consistently track and work with, without getting lost in thousands of keywords and pages. Focus Pages complete the loop by clarifying ownership, so internal linking and content decisions stay coherent, execution stays aligned, and off-page efforts reinforce the same intent instead of scattering authority across multiple URLs.

But the objective is never to rank for a handful of terms or pages. The goal is full category impact. The focus set is the zoomed-in view used to investigate specific cases and validate changes, while the real win is the halo effect that lifts the wider semantic field around them.

In practice, this is how agencies run SEO experiments without losing control: focus sets keep the work human and measurable, while dashboards track the full category outcome.

SEO experiments should be evaluated on visibility shifts, not on isolated ranking screenshots. A single page can rank for multiple intents, and a single intent can be captured by multiple pages, so you need a view that matches how search actually behaves.

ClimbinSearch category dashboards show performance by category and intent cluster, then let agencies drill down to the Focus Queries and Focus Pages that explain the movement. This makes it clear where growth comes from and where performance is redistributed instead of improved.

This is how agencies move from reporting to control. They can see what changed, why it changed, and what to do next, because the measurement surface is stable and structured.

If you want to run SEO experiments that stakeholders trust, you need dashboards that explain movement at the same level where Google operates: the query level.

Explore the Search Analytics Module https://www.climbinsearch.com/search-analytics-module

Clients do not only want outputs. They want an engine that produces measurable growth through disciplined experimentation. That requires a workflow that can be repeated and improved, not a series of one-off initiatives.

ClimbinSearch supports a consistent loop. Define a hypothesis, choose a test and a control group, ship one controlled change, and track results weekly using the same query segments and dashboards. That rhythm keeps experiments comparable and prevents teams from chasing noise.
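A simple way to read the weekly numbers from such a loop is a difference-in-differences comparison: how much the test group moved, minus how much the control group moved over the same weeks. The sketch below is one common approach, not necessarily how ClimbinSearch computes results. The function name and the weekly click figures are made up for illustration.

```python
from statistics import mean


def diff_in_diff(test_before, test_after, control_before, control_after):
    """Change in the test group beyond what the control group experienced.

    Each argument is a list of weekly totals (for example clicks)
    for the same query segment.
    """
    test_delta = mean(test_after) - mean(test_before)
    control_delta = mean(control_after) - mean(control_before)
    return test_delta - control_delta


# Hypothetical weekly clicks on the target query cluster.
effect = diff_in_diff(
    test_before=[100, 100],
    test_after=[130, 130],
    control_before=[200, 200],
    control_after=[210, 210],
)
print(effect)  # 20: the test group gained 30 per week, the control group gained 10
```

The control group's movement stands in for everything that was not the change: seasonality, SERP shifts, paid budgets. When the two groups differ a lot in size, compare percentage changes instead of absolute ones.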

Over time, experiments compound into a playbook. Learnings become reusable rules, priorities become clearer, and the agency can prove progress month after month with fewer debates and more evidence.

This is what “best practice SEO experiments” look like in the real world: a repeatable operating rhythm, not a one-time project.

SEO results can be misread when paid media shifts, promotions change demand, or budgets increase coverage on the same queries. If you track SEO in isolation, you can conclude the wrong thing and optimize in the wrong direction.

Partner agencies use ClimbinSearch to interpret SEO in the context of the broader search ecosystem. They track value generating queries, observe how paid coverage affects total search outcome, and avoid false conclusions caused by channel overlap.

When paid search overlaps organic strength, agencies can activate SEA SEO cannibalization automated tests to measure incrementality and decide what paid is truly adding. Methodology here:

https://www.climbinsearch.com/article/seo-sea-experiments-how-to-measure-incrementality-between-organic-and-paid-search

This is why modern SEO experiments should include paid context when needed: otherwise the measurement surface is incomplete.
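As a rough illustration of what "incremental" means here, the sketch below compares total clicks on a query cluster with paid active against organic clicks with paid paused. It is a simplified back-of-the-envelope calculation under the assumption that demand is comparable in both periods. It is not the methodology described in the linked article, and the function name and figures are hypothetical.

```python
def paid_incrementality(organic_on, paid_on, organic_off):
    """Share of paid clicks that were truly additional.

    organic_on:  organic clicks while paid ads were running
    paid_on:     paid clicks while paid ads were running
    organic_off: organic clicks in a comparable period with paid paused
    """
    if paid_on <= 0:
        raise ValueError("paid_on must be positive")
    incremental_clicks = (organic_on + paid_on) - organic_off
    return incremental_clicks / paid_on


# Hypothetical figures for one query cluster.
share = paid_incrementality(organic_on=700, paid_on=400, organic_off=900)
print(share)  # 0.5: half of the paid clicks would have arrived organically anyway
```

A share near 1 suggests paid is adding reach. A share near 0 suggests paid is mostly moving clicks that organic would have captured. Because demand rarely holds still between periods, a result like this is only as reliable as the comparison behind it.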

The aim is not to fix SEO cannibalization as a standalone problem. The aim is to improve how visibility shifts and compounds at query level, page level, and category level.

When results look flat, the reason is often redistribution. The same query cluster is split across multiple URLs, Google rotates the ranking URL, clicks move from one page to another, and category performance can look unchanged even when the work is improving the right intent signals.
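Redistribution can be made visible with two numbers per query cluster: how much the cluster total changed, and how much click share moved between URLs. The sketch below is an illustrative calculation with hypothetical function names, URLs, and data, not a description of how ClimbinSearch tracks stability.

```python
def url_shares(clicks_by_url):
    """Convert clicks per URL into each URL's share of the cluster total."""
    total = sum(clicks_by_url.values())
    if total == 0:
        return {url: 0.0 for url in clicks_by_url}
    return {url: clicks / total for url, clicks in clicks_by_url.items()}


def redistribution(before, after):
    """Return (relative change in cluster total, share of clicks that moved between URLs).

    before / after: dicts mapping URL -> clicks for one query cluster.
    The first value is None when the cluster had no clicks in the 'before' period.
    """
    total_before = sum(before.values())
    total_after = sum(after.values())
    total_change = None if total_before == 0 else (total_after - total_before) / total_before

    shares_before = url_shares(before)
    shares_after = url_shares(after)
    urls = set(before) | set(after)
    # Half the sum of absolute share changes: 0 means no movement, 1 means a full swap.
    shifted = sum(abs(shares_after.get(u, 0.0) - shares_before.get(u, 0.0)) for u in urls) / 2
    return total_change, shifted


# Hypothetical cluster split across two competing URLs.
before = {"/category/trail-shoes": 600, "/blog/best-trail-shoes": 400}
after = {"/category/trail-shoes": 300, "/blog/best-trail-shoes": 700}
change, shifted = redistribution(before, after)
print(change, round(shifted, 2))  # 0.0 0.3: flat total, but 30% of clicks changed URL
```

A flat total combined with a high shifted share is the pattern described above: the category looks unchanged while Google is rotating which page owns the intent.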

ClimbinSearch helps agencies monitor this redistribution through categorization, Focus Pages ownership, and stability tracking over time. Then agencies decide whether to consolidate, differentiate scope, or adjust internal linking based on what improves category outcomes, not on a cosmetic “issue fixed” checkbox.

This matters for SEO experiments because redistribution can hide improvement. If you do not track distribution, you can misread a good experiment as a failed one.

When you need to measure overlap between paid and organic outcomes for the same query segments, use the SEA SEO cannibalization tests: https://www.climbinsearch.com/sea-seo-automatic-cannibalization-tests

Experiments become stronger when you interpret them in the context of the market. Rankings alone do not tell you whether demand shifted, whether consumers changed language, or whether competition intensified.

For top-tier partners, ClimbinSearch adds a quarterly market insights layer based on search behavior. Agencies can understand branded versus non-branded demand structure, seasonality, product family traction, and how interest evolves across the category.

This gives experiments context and improves prioritization. It helps agencies focus testing where demand is growing, defend where demand is contracting, and explain performance with credibility instead of assumptions.

SEO experiments are not only about what you changed on the site. They are also about what changed in the market while you were measuring.

They test title rewrites and meta description updates aligned with intent and SERP language to increase clicks without needing ranking gains first. This is common because it is fast, controlled, and measurable at query cluster level.


They test changes that improve category relevance and user flow, then measure impact on the category query cluster and the halo effect across related searches.


They test link placement, anchor logic by intent, links from authority pages to focus pages, and hub to spoke structures. Internal linking is one of the most repeatable levers and often the easiest to scale once rules are proven.

Hub to spoke is an internal linking and content structure. The hub is the main page that should own the broad topic or category: the central authority page. Spokes are supporting pages that cover subtopics, long-tail intents, comparisons, FAQs, or related product lines.

How it works


They test consolidation when multiple pages compete for the same query cluster, and measure whether the owner page becomes more stable and the category gains total visibility.


They test off page efforts as controlled inputs, so authority growth reinforces the same intent and the same focus pages, not scattered URLs.


They test how paid coverage affects total search outcome on the same query cluster and whether paid is incremental or mostly shifts attribution from organic.


SEO experiments turn SEO from opinions into evidence, so you can prove what actually drives results.

A structured SEO experiments system: categorization rules and branded definitions aligned with brand strategy, Focus Queries and Focus Pages for governance and ownership, and dashboards that track visibility shifts at query, page, and category level. We also connect SEO with paid context when needed, so experiment interpretation stays accurate.

They run repeatable SEO experiments on the levers that move results: titles and meta descriptions for CTR, category page structure and semantic coverage, internal linking and hub to spoke structures, consolidation to reduce overlap, and off page authority that reinforces the same focus pages. They measure outcomes on the same query clusters over time and turn learnings into the next set of priorities.